Ontology Engineering and Evolution

An ontology is a machine-processable description of the concepts that are relevant in some application domain, e.g., nanotechnology. It fixes a vocabulary for these concepts, describes their meaning, and captures relevant background knowledge. Ontologies are widely used (and developed) in a variety of knowledge-intensive areas, in particular bio-health. They are written in an ontology language, most prominently OWL (standardized by the W3C), whose OWL DL variant can be regarded as a decidable fragment of first-order logic. Various communities have developed repositories of ontologies so that they can be shared, and some have developed quality criteria to spread good practice in ontology design. It makes sense to distinguish two parts of an ontology: the class-level or schema part, which introduces terms for concepts and properties and describes how they are related to each other, and the data or fact part, which contains facts about individual objects and their relations, using the terms introduced in the class-level part.
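
The split between the two parts can be pictured with a minimal Python sketch. It is neither OWL syntax nor the API of any ontology library; the `Ontology` class, its fields, and the nanotechnology terms (`CarbonNanotube`, `hasDiameterNm`, `sample42`) are hypothetical. It only illustrates that the fact part is expected to reuse terms introduced in the class-level part.

```python
from dataclasses import dataclass, field


@dataclass
class Ontology:
    # Class-level (schema) part: declared class and property names,
    # plus subclass axioms written as (subclass, superclass) pairs.
    classes: set[str] = field(default_factory=set)
    properties: set[str] = field(default_factory=set)
    subclass_of: set[tuple[str, str]] = field(default_factory=set)
    # Data (fact) part: class assertions (individual, class) and
    # property assertions (subject, property, value).
    class_assertions: set[tuple[str, str]] = field(default_factory=set)
    property_assertions: set[tuple[str, str, str]] = field(default_factory=set)

    def undeclared_terms(self) -> set[str]:
        """Terms used in the fact part that the class-level part does not introduce."""
        used_classes = {c for _, c in self.class_assertions}
        used_props = {p for _, p, _ in self.property_assertions}
        return (used_classes - self.classes) | (used_props - self.properties)


# Hypothetical example: a tiny class hierarchy and one described sample.
onto = Ontology(
    classes={"Nanomaterial", "Nanotube", "CarbonNanotube"},
    properties={"hasDiameterNm"},
    subclass_of={("CarbonNanotube", "Nanotube"), ("Nanotube", "Nanomaterial")},
    class_assertions={("sample42", "CarbonNanotube")},
    property_assertions={("sample42", "hasDiameterNm", "1.4")},
)
print(onto.undeclared_terms())  # set()
```

Adding a fact such as `("sample7", "QuantumDot")` without first declaring `QuantumDot` in the class-level part would make it show up in `undeclared_terms()`.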

In recent years, various tools have been developed to work with ontologies. We distinguish between

- ontology editors: these are tools that help domain experts capture their knowledge in an ontology, i.e., write, extend, and modify ontologies (Protégé 4, WebProtégé).

- ontology browsers: these are tools that give users access to ontologies; they can be purpose-built for a specific ontology or generic.

- reasoners: these are highly optimized theorem provers that have been designed for an ontology language, in particular OWL, to make the implicit knowledge in an ontology explicit (FaCT++, ELK, Pellet).

- frameworks to develop ontology-based information systems: this is generic infrastructure that has been developed to build information systems in which some or all of the domain knowledge is captured in an ontology (OWL API, OWLlink, Jena, HOBO, etc.).

- others: tools have also been developed for ontology-based database access, for integrating ontologies with other tools (including Microsoft Excel), for merging and diffing ontologies, for learning ontologies from text, etc.
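
What it means for a reasoner to "make implicit knowledge explicit" can be shown with a deliberately tiny Python sketch. It handles only atomic subclass axioms by naive forward chaining; real OWL reasoners such as FaCT++, ELK, or Pellet support far more expressive axioms and use much more sophisticated procedures. The function `entailed_types` and the class names are hypothetical.

```python
def entailed_types(
    subclass_of: set[tuple[str, str]],
    class_assertions: set[tuple[str, str]],
) -> set[tuple[str, str]]:
    """Toy forward chaining over atomic subclass axioms only:
    if x is an instance of C and C is a subclass of D, then x is an instance of D.
    The rule is reapplied until no new class assertion appears (a fixpoint)."""
    inferred = set(class_assertions)
    changed = True
    while changed:
        changed = False
        for individual, cls in list(inferred):  # iterate over a copy while adding
            for sub, sup in subclass_of:
                if sub == cls and (individual, sup) not in inferred:
                    inferred.add((individual, sup))
                    changed = True
    return inferred


# Hypothetical class-level axioms and one explicitly asserted fact.
axioms = {("SiliconQuantumDot", "QuantumDot"), ("QuantumDot", "Nanostructure")}
asserted = {("dotA", "SiliconQuantumDot")}

# The implicit knowledge is whatever is entailed but was not asserted.
implicit = entailed_types(axioms, asserted) - asserted
print(sorted(implicit))  # [('dotA', 'Nanostructure'), ('dotA', 'QuantumDot')]
```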

Comprehensive nanotechnology ontologies will provide clear advantages for the integration, processing, and analysis of massive, heterogeneous data sets.

In general, ontologies can be used to describe the “meaning” of data. Today, part of this meaning is embedded in business processes, in documentation, and in the heads of the people who use the data. When data is integrated across organisations, it must first be rationalised, because the terms used to describe the data, and their definitions, may not be uniform: the same component may go by different names, or different components may unwittingly share the same name. Moreover, because information about these meanings is available only in human-readable form, automated reasoning techniques, which can work at a much larger scale, cannot be applied to it. Ontologies go some way towards providing this information in a form that computers can process, and hence can help with the integration, processing, and analysis of massive data sets.
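
The two naming problems mentioned above, synonyms and homonyms, can be made concrete with a small Python sketch. It assumes each organisation's local terms have already been mapped by hand to identifiers of a shared ontology; the vocabularies, the `ex:` identifiers, and the helper functions are hypothetical. "Gate" is used as the homonym because it can denote either a quantum logic gate or a gate electrode.

```python
# Hypothetical local vocabularies of two organisations, each mapped by hand
# to identifiers of a shared ontology (the "ex:" identifiers are illustrative).
TERM_MAPPINGS = {
    "lab_a": {"qubit": "ex:Qubit", "gate": "ex:QuantumLogicGate"},
    "lab_b": {"QBit": "ex:Qubit", "gate": "ex:GateElectrode"},
}


def synonyms(mappings: dict[str, dict[str, str]]) -> dict[str, set[str]]:
    """Shared concepts that the sources refer to by more than one local name."""
    by_concept: dict[str, set[str]] = {}
    for vocab in mappings.values():
        for term, concept in vocab.items():
            by_concept.setdefault(concept, set()).add(term)
    return {c: terms for c, terms in by_concept.items() if len(terms) > 1}


def homonyms(mappings: dict[str, dict[str, str]]) -> set[str]:
    """Local names that different sources use for different shared concepts."""
    by_term: dict[str, set[str]] = {}
    for vocab in mappings.values():
        for term, concept in vocab.items():
            by_term.setdefault(term, set()).add(concept)
    return {t for t, concepts in by_term.items() if len(concepts) > 1}


print({c: sorted(t) for c, t in synonyms(TERM_MAPPINGS).items()})  # {'ex:Qubit': ['QBit', 'qubit']}
print(homonyms(TERM_MAPPINGS))  # {'gate'}
```

Once both sources are expressed in the shared identifiers, data about `ex:Qubit` can be combined safely, while the two meanings of "gate" stay apart.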

NQCG’s Artificial Intelligence strategy involves engineering and using computational ontologies to integrate and process big data: to provide a unified view (or even several views) over a set of heterogeneous data sources, including for users who lack detailed knowledge of these data sources, their schemas, or SQL and other query languages. Using ontologies, we can offer such users an adaptable, semantically aware query interface that gives them access to these data sources without requiring that knowledge; this relies on mappings from the data sources into the unified schema.
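
The role of the mappings can be illustrated with a minimal Python sketch of ontology-based data access. It assumes two hypothetical sources with different schemas, held here in one in-memory SQLite database for convenience; the table names, the `SOURCE_MAPPINGS` dictionary, and `instances_of` are illustrative only. Production systems typically express such mappings in a standard language such as W3C R2RML rather than as raw SQL strings in code.

```python
import sqlite3

# Two hypothetical data sources with different schemas (in-memory, for illustration).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE lab_a_devices (dev_id TEXT, kind TEXT);
    CREATE TABLE lab_b_samples (sample_code TEXT, is_qd INTEGER);
    INSERT INTO lab_a_devices VALUES ('A-1', 'quantum dot'), ('A-2', 'resonator');
    INSERT INTO lab_b_samples VALUES ('B-7', 1), ('B-8', 0);
""")

# Mappings from each source into the unified schema: for every ontology class,
# SQL queries returning the identifiers of its instances. Users never see these.
SOURCE_MAPPINGS = {
    "QuantumDot": [
        "SELECT dev_id FROM lab_a_devices WHERE kind = 'quantum dot'",
        "SELECT sample_code FROM lab_b_samples WHERE is_qd = 1",
    ],
}


def instances_of(ontology_class: str) -> list[str]:
    """Answer a query phrased only in ontology terms by running the mapped SQL
    against every source and merging the results."""
    results: list[str] = []
    for sql in SOURCE_MAPPINGS.get(ontology_class, []):
        results.extend(row[0] for row in conn.execute(sql))
    return sorted(results)


print(instances_of("QuantumDot"))  # ['A-1', 'B-7']
```

The user asks only for instances of `QuantumDot`; which tables, columns, and encodings (a text label in one source, a flag in the other) are involved is hidden in the mappings.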


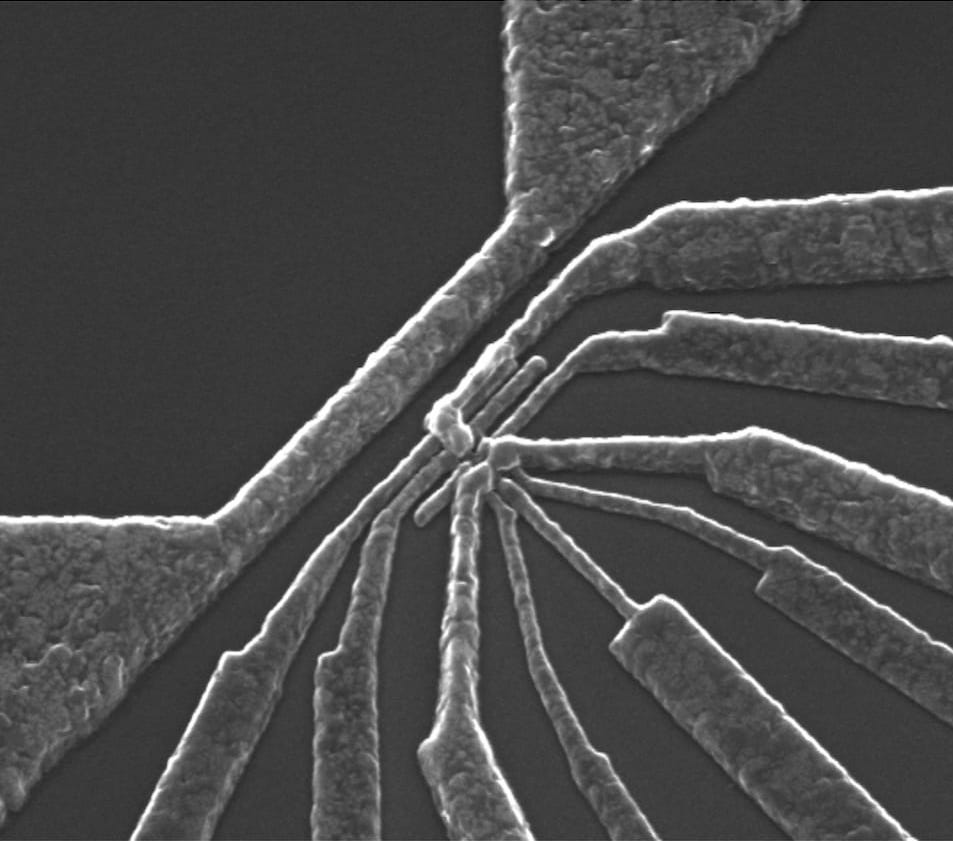

Image Courtesy A. Dzurak, University of New South Wales